Flying Fast and Furious: AI-Powered FastAPI Deployments

Have you ever felt bogged down by the repetitive work of deploying applications? What if you could hand off those tedious tasks to an AI assistant and watch your productivity soar? That's exactly the adventure I embarked on with FastChatAPI, leveraging AI to streamline the development and deployment of a FastAPI application to Fly.io using GitHub Actions.

Continuous delivery is the unsung hero of modern software development. It keeps your application in a deployable state at all times, which means fewer risks and faster updates. Integrating AI into this process transformed my approach: it automated the mundane and freed me to focus on the creative and complex parts of the project.

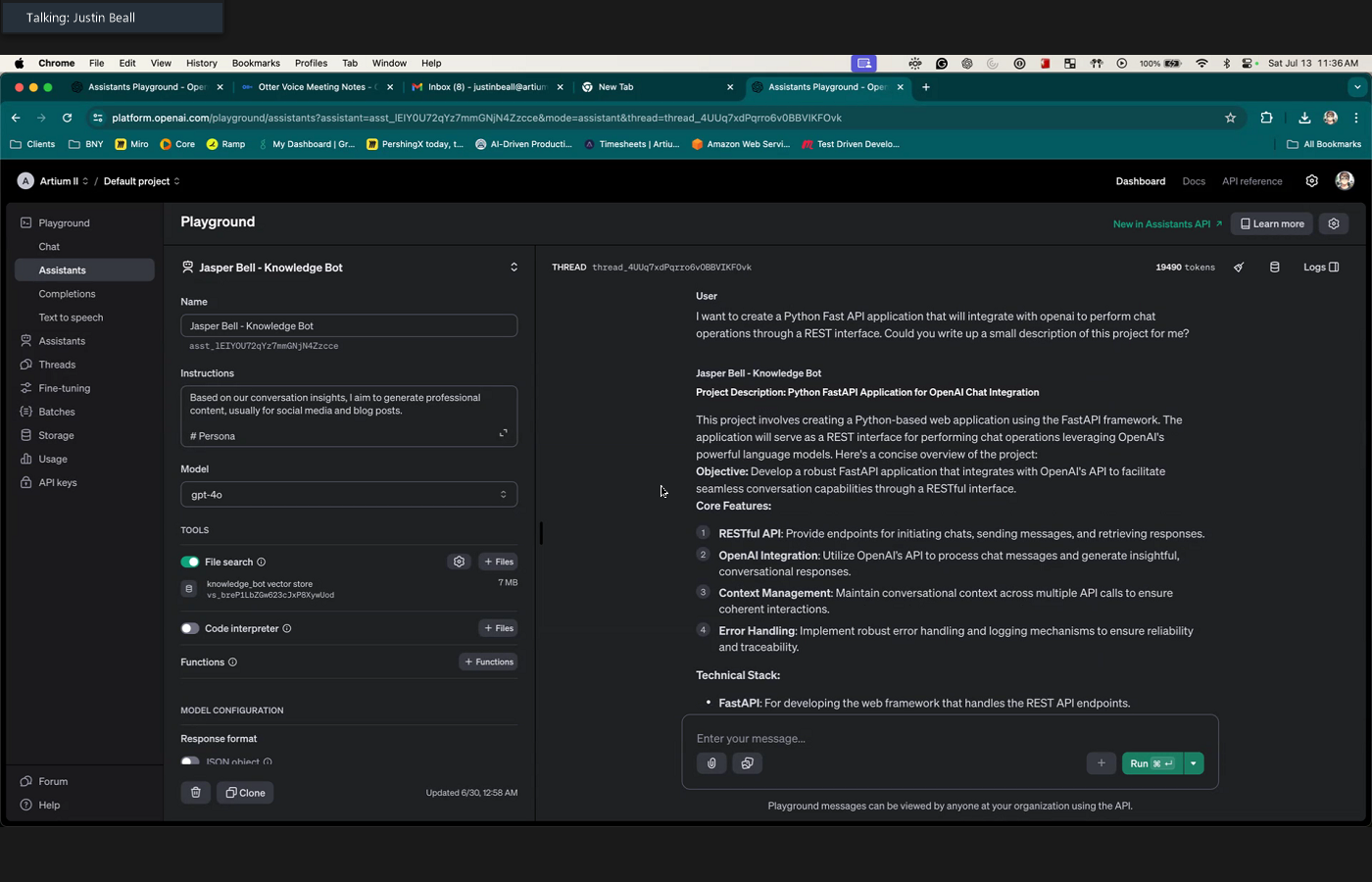

For this tutorial, I paired two AI tools. The first was my own "Knowledge Bot," an OpenAI Assistant tailored to my knowledge base and set up as a pair programming partner that shares many of the concepts and practices I value. The second, for the development side, was Cursor.sh. Cursor.sh is like GitHub Copilot on steroids: it's an AI IDE in its own right rather than a plugin bolted onto a traditional Visual Studio Code setup. I started with my assistant for setup and project questions. Then, while writing code, I relied on Cursor and its built-in GPT-4o integration. This setup worked well because Cursor automatically picked up the context of the files I was viewing, or of the entire workspace when needed.

The project itself is FastChatAPI: a scalable FastAPI application deployed on Fly.io, automated with GitHub Actions, and turbocharged with AI assistance. Each technology earned its place on its own merits (FastAPI's speed and simplicity, Fly.io's robust deployment, and GitHub Actions' seamless automation), but the real payoff came from combining them. Add AI tools like ChatGPT to the mix, and the development process became efficient and almost enjoyable.

The complete code for this project is available on GitHub here. You can also check out the live application here.

Now, let's build and deploy FastChatAPI step by step, with personal insights and practical tips along the way.

Project Overview

The primary goal of FastChatAPI is to build a scalable FastAPI application that can be efficiently developed, tested, and deployed using modern tools and AI assistance. FastChatAPI can be a foundation for more complex chat applications, customer support systems, or any project requiring a scalable and robust API backend.

Tools and Technologies

FastAPI: FastAPI was chosen because it is lightweight and fast, making it ideal for building quick, responsive APIs. Its key features include request validation driven by Python type hints, automatically generated interactive documentation, and, according to the FastAPI project itself, performance on par with Node.js and Go.

Fly.io: Fly.io offers straightforward global deployment with auto-scaling, making it a reliable choice for hosting applications. Multi-region deployment and automatic scaling help your application handle traffic spikes, and its CLI-driven setup makes for a smooth deployment experience.

GitHub Actions: GitHub Actions integrates tightly with your GitHub repository, providing powerful automation for CI/CD workflows. With features like concurrent job execution, an extensive marketplace of actions, and ease of configuration, GitHub Actions makes setting up a robust CI/CD pipeline straightforward.

AI Assistance (Knowledge Bot, Cursor.sh, ChatGPT): AI tools like Knowledge Bot and Cursor.sh were integral to automating repetitive tasks, suggesting code, and keeping best practices in view. Knowledge Bot, tailored to my knowledge base, acted as a pair programming partner. Cursor.sh, an AI IDE with built-in GPT-4o integration, offered context-aware coding help by understanding the open files and the wider workspace.

Initial Preparations

Setting Up the Project Structure: Starting from a template or an existing project structure keeps things consistent and puts best practices in place from the outset. I cloned a starter project, which provided a solid foundation to build on. The first step was to clean up the repository by removing unnecessary files and initializing a new Git repository.


Configuring Tools and Dependencies: I used Hatch, a Python project and environment manager, to simplify setup. Installing and configuring it was straightforward and gave me a correctly configured Python environment. Essential dependencies such as FastAPI and Uvicorn are declared in the pyproject.toml file, which keeps them easy to manage.


Now that the project is ready, it's time to dive deeper into developing the FastAPI application, containerizing it with Docker, and automating the deployment process with GitHub Actions—all supercharged by AI assistance.

Developing the FastAPI Application

To launch the FastChatAPI project, you'll develop a basic FastAPI application. This involves creating the main application file, setting up a server to run the app, and configuring the development environment.

Creating the Basic FastAPI App

First, let's create the api.py file, which will initialize our FastAPI application and define our first endpoint.

Here's a quick walk-through:

Create the api.py File: This file will host our FastAPI application.

Import FastAPI: Start by importing the FastAPI framework.

```python
from fastapi import FastAPI
```

Create an Instance of FastAPI: Instantiate the FastAPI class to create an application instance.

```python
api = FastAPI()
```

Define a Root GET Endpoint: Define a simple GET endpoint that returns a JSON message.

```python
@api.get("/")
def read_root():
    return {"message": "Hello Fly.io World"}
```

Your api.py should look like this:

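```python
from fastapi import FastAPI

# The application instance; server.py and the start-app script load it as "src.app.api:api".
api = FastAPI()


@api.get("/")
def read_root():
    # FastAPI serializes the returned dict into a JSON response.
    return {"message": "Hello Fly.io World"}
```

By starting with a simple "Hello World" endpoint, you can quickly verify that your setup works correctly before scaling up to more complex features.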

Setting Up the Main Server File

Next, set up server.py, which runs our FastAPI application with Uvicorn.

Here's what you need to do:

Import Uvicorn: Uvicorn is an ASGI server to run our application.

```python
import uvicorn
```

Define a main Function: This function will start the FastAPI app on the specified host and port.

```python
def main():
    uvicorn.run("src.app.api:api", host="127.0.0.1", port=8000, reload=True)
```

Check for __main__ Execution: Ensure that the main function runs when server.py is executed directly.

```python
if __name__ == "__main__":
    main()
```

Your server.py should look like this:

```python
import uvicorn


def main():
    # The app is passed as an import string, which Uvicorn requires for reload=True.
    uvicorn.run("src.app.api:api", host="127.0.0.1", port=8000, reload=True)


if __name__ == "__main__":
    main()
```

This setup means that whenever you run server.py, Uvicorn starts the FastAPI application and listens for requests on localhost, port 8000.
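The server above hard-codes 127.0.0.1 and port 8000, which suits local development. If you later want the same entry point to work elsewhere, one option is to read those values from environment variables. The sketch below assumes hypothetical HOST, PORT, and RELOAD variables (FastChatAPI does not define them) and only builds the keyword arguments; server.py would pass them on with uvicorn.run("src.app.api:api", **server_settings()).

```python
import os
from collections.abc import Mapping


def server_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Build uvicorn.run() keyword arguments from hypothetical HOST/PORT/RELOAD variables."""
    return {
        # 127.0.0.1 only accepts local connections; a container would need 0.0.0.0.
        "host": env.get("HOST", "127.0.0.1"),
        "port": int(env.get("PORT", "8000")),
        # Auto-reload is a development convenience, so it stays on unless disabled.
        "reload": env.get("RELOAD", "true").lower() in {"1", "true", "yes"},
    }
```

With no variables set, the defaults reproduce the original behaviour exactly, and keeping the parsing in a plain function makes it testable without starting a server.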

Configuring the Environment for Local Development

To manage your development environment and dependencies efficiently, we'll use Hatch.

Create the Python Environment Using Hatch: Hatch simplifies environment management, making it easier to handle dependencies and ensuring consistency across different setups.

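```shell
pip install hatch
hatch env create
```

Add Dependencies: Specify essential dependencies like FastAPI and Uvicorn in the pyproject.toml file for easy management.

```toml
[project]
dependencies = ["fastapi", "uvicorn"]
```

Installing Dependencies: Hatch has no separate install command. It installs the declared dependencies when it creates the environment, and it automatically re-syncs them the next time you use hatch run or hatch shell after pyproject.toml changes, so adding a dependency is just a matter of editing the file.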

Using Hatch to manage your Python environment ensures a smooth workflow, with dependencies handled consistently across different environments. This setup is handy when collaborating with others or deploying your application.
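A quick way to confirm that hatch run really executes inside the project's environment is to compare sys.prefix with sys.base_prefix: inside a virtual environment, sys.prefix points at the environment while sys.base_prefix keeps pointing at the base installation. The script below is a hypothetical helper (say, check_env.py), not part of the project; running it with hatch run python check_env.py should report a virtual environment whose path ends in .venv, given path = ".venv" in the Hatch configuration.

```python
import sys


def running_in_virtualenv() -> bool:
    """Return True when this interpreter belongs to a virtual environment."""
    # Virtual environments (including the ones Hatch creates) set sys.prefix to
    # the environment directory, while sys.base_prefix stays on the base install.
    return sys.prefix != sys.base_prefix


def describe_interpreter() -> str:
    kind = "virtual environment" if running_in_virtualenv() else "base interpreter"
    return f"{kind}: {sys.prefix}"


print(describe_interpreter())
```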

Let's review the pyproject.toml file to see the complete project setup:

```toml
[build-system]
requires = ["hatchling"]
build-backend = "hatchling.build"

[project]
name = "fast-chat-api"
dynamic = ["version"]
description = "A robust FastAPI application that integrates with OpenAI's API to facilitate seamless conversation capabilities through a RESTful interface."
license = { file = "LICENSE" }
readme = "README.md"
authors = [{ name = "Justin Beall", email = "jus.beall@gmail.com" }]
requires-python = ">=3.11"
dependencies = ["fastapi", "loguru", "openai", "python-dotenv", "uvicorn"]

[tool.hatch.version]
path = "setup.cfg"
# Single-quoted TOML literal string: basic (double-quoted) strings reject the \S escape.
pattern = 'version = (?P<version>\S+)'

[tool.hatch.build.targets.sdist]
include = ["/src"]

[tool.hatch.build.targets.wheel]
packages = ["src"]

[tool.hatch.envs.default]
type = "virtual"
path = ".venv"
# pytest-asyncio is required for the @pytest.mark.asyncio tests.
dependencies = ["pyright", "pytest", "pytest-asyncio", "pytest-cov"]

[tool.hatch.envs.default.scripts]
dev = "python server.py"
start-app = "uvicorn src.app.api:api --host 0.0.0.0 --port 8000"
test = "pytest --cache-clear --cov -m 'not integration'"
test-integration = "pytest --cache-clear --cov"

[tool.hatch.envs.hatch-static-analysis]
config-path = "ruff_defaults.toml"

[tool.ruff]
extend = "ruff_defaults.toml"

[tool.ruff.lint.flake8-tidy-imports]
ban-relative-imports = "parents"

[tool.pytest.ini_options]
markers = "integration: an integration test that hits external uncontrolled systems"
```

With the environment configured, you can test your application by running the command:

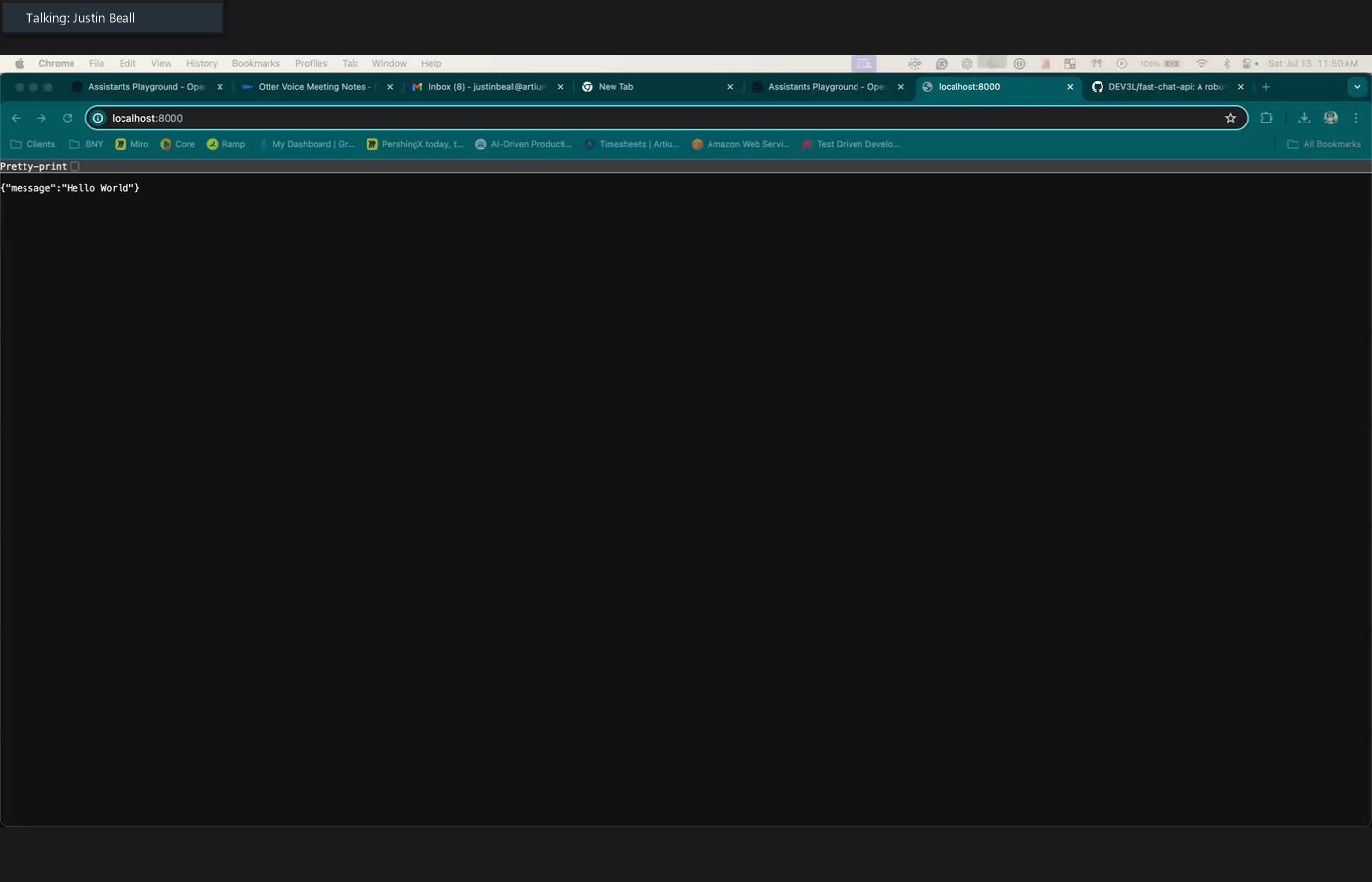

```shell
hatch run dev
```

Then navigate to http://127.0.0.1:8000 in your browser. You should see the JSON response {"message": "Hello Fly.io World"}.

Starting with this simple setup helps verify everything is working correctly. With your environment configured and your application running, you're ready to move on to containerizing the application with Docker and setting up automated deployment using GitHub Actions—all enhanced with AI assistance.

Containerizing the Application with Docker

Containerizing your application ensures it runs consistently across different environments, eliminating the "works on my machine" problem. Docker is a powerful tool that creates lightweight, portable containers for your applications. Here's how to containerize the FastChatAPI application.

Writing the Dockerfile

The Dockerfile defines the application's environment, ensuring consistency across various development and production setups. Here's a step-by-step guide to creating the Dockerfile for FastChatAPI:

Base Image: Start with a lightweight Python base image.

```dockerfile
FROM python:3.12-slim
```

Set Working Directory: Define the working directory inside the container.

```dockerfile
WORKDIR /app
```

Copy Files: Copy the necessary files from the host to the container.

```dockerfile
COPY . /app
```

Install Dependencies: Install Hatch and let it create the project environment, which installs the dependencies.

```dockerfile
RUN pip install hatch
RUN hatch env create
```

Expose Port: Document the port on which the application listens. EXPOSE does not publish the port by itself; you still map it with -p when running the container.

```dockerfile
EXPOSE 8000
```

Define Environment Variable: Set any environment variables the application needs.

```dockerfile
ENV NAME=FastChatAPI
```

Startup Command: Specify the command that starts the application.

```dockerfile
CMD ["hatch", "run", "start-app"]
```

Your Dockerfile should look like this:

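```dockerfile
# Lightweight official Python base image
FROM python:3.12-slim

# All following commands run from /app inside the container
WORKDIR /app

# Copy the project (pyproject.toml, server.py, src/, ...) into the image
COPY . /app

# Install Hatch, then create the project environment with its dependencies
RUN pip install hatch
RUN hatch env create

# Document the port Uvicorn listens on (publish it with -p at runtime)
EXPOSE 8000

ENV NAME=FastChatAPI

# start-app runs Uvicorn on 0.0.0.0:8000 so it is reachable from outside the container
CMD ["hatch", "run", "start-app"]
```

This Dockerfile sets up a consistent runtime environment for the FastChatAPI application, ensuring that all dependencies are correctly installed and the application is ready to run. Note that start-app binds Uvicorn to 0.0.0.0 rather than 127.0.0.1; inside a container, that is what lets traffic forwarded from the host reach the app. It is also worth adding a .dockerignore file that excludes .venv, so your local Hatch environment is not copied into the image.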

Testing Local Docker Setup

Before deploying the application to Fly.io, testing the Docker setup locally is essential.

Building the Docker Image: Use the docker build command to create the Docker image.

```shell
docker build -t fast-chat-api .
```

- The -t flag tags the image with a name (fast-chat-api in this case).

Running the Docker Container: Use the docker run command to start the container and test the application locally.

```shell
docker run -p 8000:8000 fast-chat-api
```

- The -p flag maps port 8000 on the host to port 8000 in the container, making the application accessible via http://localhost:8000.

You can now open your browser and navigate to http://localhost:8000. You should see the JSON response {"message": "Hello Fly.io World"}.
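Checking the page in a browser works, but the same check can be scripted so you can repeat it after every rebuild. This is a minimal sketch using only the standard library; check_root is a hypothetical helper, and it assumes the container is published on localhost:8000 and returns exactly the JSON defined in api.py.

```python
import json
import urllib.request

EXPECTED = {"message": "Hello Fly.io World"}


def check_root(base_url: str = "http://localhost:8000", timeout: float = 5.0) -> dict:
    """GET the root endpoint and confirm it returns the expected greeting."""
    # An empty ProxyHandler ignores any http_proxy settings, since the target is local.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    # The opener raises HTTPError for 4xx/5xx and URLError if nothing is listening.
    with opener.open(base_url.rstrip("/") + "/", timeout=timeout) as response:
        if response.status != 200:
            raise RuntimeError(f"Unexpected status code: {response.status}")
        payload = json.loads(response.read().decode("utf-8"))
    if payload != EXPECTED:
        raise RuntimeError(f"Unexpected payload: {payload!r}")
    return payload
```

Calling check_root() against the running container returns the parsed payload, or raises with a message describing what differed.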

Troubleshooting Common Issues

While working with Docker, you might encounter some common issues:

Port Conflicts:

- If Docker reports that port 8000 is already in use, make sure no other application is using it, or publish the container on a different host port instead (for example, -p 8001:8000, then browse to http://localhost:8001).

Access Issues:

- Ensure Docker is running and properly configured. Use commands like docker ps to check running containers and docker logs <container-id> to diagnose issues.
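Before starting the container, you can check from Python whether anything already holds the port. This is a rough heuristic sketch; port_is_free and find_free_port are hypothetical helper names. It tries to bind the port on the loopback interface, so a failure usually means another process (possibly an older container) is already using it.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if this process could bind host:port, i.e. nothing else holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


def find_free_port(host: str = "127.0.0.1") -> int:
    """Ask the OS for an unused port; binding to port 0 makes it pick one."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind((host, 0))
        return sock.getsockname()[1]
```

If port_is_free(8000) returns False, either stop the other process or publish the container on another host port, for example docker run -p 8001:8000 fast-chat-api.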

Insight and Practical Tips

Consistent Environments: Docker ensures the application behaves similarly in development, testing, and production environments. By containerizing the application, you eliminate environmental discrepancies and can confidently say, "It works everywhere!"

Efficiency in Development: Containerization speeds up the development and deployment processes. Sharing your environment with team members becomes effortless, and deploying to different platforms is seamless without worrying about compatibility issues.

With the Docker setup verified locally, you're ready to move on to automating tests and enhancing reliability before finally deploying the application using GitHub Actions—all with the help of AI assistance.

Automating Tests and Enhancing Reliability

Automated testing is crucial for maintaining high code quality and ensuring your application works as expected. By catching bugs early, automated tests save time and reduce the risk of introducing faults into production. Let's dive into setting up tests for FastChatAPI and ensuring our CI pipeline is solid.

Writing Unit Tests

We'll start by writing unit tests for our FastAPI endpoints. Automated tests ensure that any changes to the codebase don't break existing functionality.

Creating Tests for FastAPI Endpoints

Set up the Testing Framework:

We'll use pytest for the test framework and httpx to send asynchronous HTTP requests to our FastAPI endpoints.

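```shell
pip install pytest pytest-asyncio httpx
```

The pytest-asyncio plugin is what makes the @pytest.mark.asyncio marker work; pytest cannot run async def tests on its own. Make sure it is also listed in the Hatch default environment's dependencies in pyproject.toml so that hatch run test finds it locally and in CI.

Writing the Test Case: We'll write a test for the root endpoint that checks for a status code of 200 and a specific JSON response.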

Here's the test case:

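Import Necessary Modules: Import pytest for the testing framework, httpx's AsyncClient and ASGITransport for HTTP requests, and the FastAPI instance from the sibling api.py module.

```python
import pytest
from httpx import ASGITransport, AsyncClient

from .api import api
```

Create an Asynchronous Test Function: Use pytest.mark.asyncio to run the test on an event loop. Recent httpx releases removed the app= shortcut on AsyncClient, so the app is passed through ASGITransport, which routes requests directly to FastAPI in-process without a running server.

```python
@pytest.mark.asyncio
async def test_read_root():
    transport = ASGITransport(app=api)
    async with AsyncClient(transport=transport, base_url="http://test") as ac:
        response = await ac.get("/")
    assert response.status_code == 200
    assert response.json() == {"message": "Hello Fly.io World"}
```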

Your app_test.py should look like this:

```python
import pytest
from httpx import ASGITransport, AsyncClient

from .api import api


@pytest.mark.asyncio
async def test_read_root():
    # ASGITransport sends requests straight to the FastAPI app; no server is needed.
    transport = ASGITransport(app=api)
    async with AsyncClient(transport=transport, base_url="http://test") as ac:
        response = await ac.get("/")
    assert response.status_code == 200
    assert response.json() == {"message": "Hello Fly.io World"}
```

By writing these tests, you ensure your API behaves as expected and that every change is validated against existing functionality.

Setting Up Continuous Integration (CI) with GitHub Actions

Continuous Integration (CI) is a development practice in which every code change is automatically tested and validated, so issues are caught early and code quality stays high. GitHub Actions is a powerful tool for setting up CI workflows.

Creating the Continuous Integration Workflow

File Structure and Configuration:

Create a GitHub Actions workflow file named continuous-integration.yml in the .github/workflows directory.

Defining the Workflow Steps:

Check Out the Code: Use the actions/checkout action to check out the repository code.

```yaml
- uses: actions/checkout@v4
```

Set Up Python: Use the actions/setup-python action to install Python (here, the latest 3.x release).

```yaml
- name: Set up Python
  uses: actions/setup-python@v4
  with:
    python-version: "3.x"
```

Install Dependencies: Install project dependencies using Hatch.

```yaml
- name: Install dependencies
  run: |
    python -m pip install hatch
    hatch env create
```

Run Unit Tests: Execute unit tests using Hatch.

```yaml
- name: Run unit tests
  run: |
    hatch run test
```

Putting It All Together:

Create the continuous-integration.yml file with the following content:

```yaml
name: Continuous Integration

on:
  push:
    branches: ["**"]

jobs:
  tests:
    name: "Tests"
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: "3.x"
      - name: Install dependencies
        run: |
          python -m pip install hatch
          hatch env create
      - name: Run unit tests
        run: |
          hatch run test
```

Insight and Practical Tips

Catch Issues Early: Running your tests on every push helps catch problems early, making it easier to fix issues before they make it to production. This practice is essential for maintaining a high-quality codebase.

Maintain Code Quality: Automated tests and continuous integration are critical in maintaining code quality, ensuring that all changes are tested and validated consistently. By integrating tests into the CI pipeline, you can confidently make changes and expect any issues to be promptly identified.

With these tests and a robust CI workflow, your FastChatAPI project is more reliable and easier to maintain. Next, we'll dive into setting up continuous deployment with GitHub Actions to automate the deployment process to Fly.io—bringing our application to the world with just a push.

Setting Up Continuous Deployment with GitHub Actions

Continuous deployment takes automation one step further: once a change passes the pipeline, it is released without manual intervention.

Overview of Continuous Deployment (CD)

Definition and Importance:

Continuous deployment automates the release of code to production, ensuring that every change passes through a robust pipeline of tests and validations before it goes live. This approach enhances speed, consistency, and reliability in the deployment process.

Benefits:

- Speed: Faster release cycles with automated deployments.

- Consistency: Ensures deployment processes are consistent and reproducible.

- Reliability: Automated pipelines reduce the risk of human error.

Preparing for Deployment

Prerequisites:

- Fly.io Account Setup: Ensure you have a Fly.io account set up and the CLI installed.

- GitHub Repository: Ensure your repository contains the application code, the Dockerfile, and the workflow files from the previous sections.

Fly.io Setup:

- Create Fly.io Application: Use the Fly.io CLI to initialize your FastChatAPI application.

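Run the following command and follow the prompts to create and configure your application. It generates a fly.toml configuration file, which flyctl deploy reads later, so commit it to the repository.

```shell
flyctl launch
```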

Configuring GitHub Actions for Deployment

Create Deployment Workflow File:

Create a fly-deploy.yml file in the .github/workflows directory to automate the deployment process.

Defining Workflow Steps:

Triggering the Workflow:

- Specify that the workflow runs on pushes to the main branch.

```yaml
on:
  push:
    branches:
      - main
```

Check Out the Code:

- Use the actions/checkout action to check out the repository code.

```yaml
- uses: actions/checkout@v4
```

Set Up Python:

- Use the actions/setup-python action to specify the Python version.

```yaml
- name: Set up Python
  uses: actions/setup-python@v4
  with:
    python-version: "3.x"
```

Install Dependencies:

- Install dependencies using Hatch.

```yaml
- name: Install dependencies
  run: |
    python -m pip install hatch
    hatch env create
```

Run Unit Tests:

- Run the unit tests so that a change with failing tests never reaches production.

```yaml
- name: Run unit tests
  run: |
    hatch run test
```

Set Up Fly.io CLI:

- Use the superfly/flyctl-actions/setup-flyctl action to install the Fly.io CLI.

```yaml
- uses: superfly/flyctl-actions/setup-flyctl@master
```

Deploy to Fly.io:

- Deploy the application with the flyctl deploy command, authenticating with a Fly.io API token stored in the repository's secrets. The --remote-only flag builds the Docker image on Fly.io's remote builder instead of on the GitHub runner.

```yaml
- run: flyctl deploy --remote-only
  env:
    FLY_API_TOKEN: ${{ secrets.FLY_API_TOKEN }}
```

Creating the Workflow File:

Combine all the steps into the fly-deploy.yml file:

```yaml
name: Deploy to Fly.io

on:
  push:
    branches:
      - main

jobs:
  deploy:
    runs-on: ubuntu-latest
    concurrency: deploy-group # optional: ensure only one action runs at a time
    steps:
      - uses: actions/checkout@v4
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: "3.x"
      - name: Install dependencies
        run: |
          python -m pip install hatch
          hatch env create
      - name: Run unit tests
        run: |
          hatch run test
      - uses: superfly/flyctl-actions/setup-flyctl@master
      - run: flyctl deploy --remote-only
        env:
          FLY_API_TOKEN: ${{ secrets.FLY_API_TOKEN }}
```

Adding Fly.io API Token to GitHub Secrets

Generating the API Token:

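- Use flyctl to generate an API token:

```shell
flyctl auth token
```

Fly.io's current GitHub Actions guide instead suggests a narrower, app-scoped deploy token created with flyctl tokens create deploy; either kind of token can be stored as FLY_API_TOKEN.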

Adding the Secret:

- In your GitHub repository, go to Settings > Secrets and variables > Actions, add a new repository secret named FLY_API_TOKEN, and paste the token value.

Ensuring Reliable Deployments

Implementing Concurrency Control:

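- The concurrency key ensures that at most one deployment in the deploy-group group runs at a time, preventing overlapping deploys. While a deployment is in progress, a newly triggered run waits as pending; if yet another run arrives in the meantime, GitHub cancels the older pending run so only the newest one is queued.

```yaml
concurrency: deploy-group
```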

Insight and Practical Tips

Automate Everything: Automating deployments ensures that every change goes through the same process, reducing the risk of human error and increasing reliability. Continuous deployment automates the release process, making it repeatable, reliable, and efficient.

Security Considerations: Storing API tokens in GitHub Secrets keeps your sensitive information secure and inaccessible to unauthorized users. Always handle secrets carefully to maintain the security of your deployment pipeline.
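The same discipline applies inside the application: secrets such as an OpenAI API key (the project depends on the openai and python-dotenv packages) are best read from the environment at runtime, for example from Fly.io secrets set with flyctl secrets set, rather than committed to the repository. The sketch below is illustrative only; require_secret and mask are hypothetical helpers that fail fast when a secret is missing and redact it before anything is logged.

```python
import os
from collections.abc import Mapping


def require_secret(name: str, env: Mapping[str, str] = os.environ) -> str:
    """Fetch a secret from the environment, failing loudly if it is missing or empty."""
    value = env.get(name, "")
    if not value:
        raise RuntimeError(f"Missing required secret: {name}")
    return value


def mask(value: str, visible: int = 4) -> str:
    """Redact a secret for logging, keeping at most the last `visible` characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]
```

For example, calling require_secret("OPENAI_API_KEY") at startup (OPENAI_API_KEY is the variable the openai client reads by default) surfaces a misconfigured deployment immediately instead of on the first request.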

Monitoring Deployments: Monitoring your deployments helps identify and resolve issues quickly, maintaining your application's health and performance. Ensure logging and monitoring are in place to preserve your deployments' robustness.

With continuous deployment setup, every push to the main branch automatically triggers the workflow, runs tests, and deploys the latest version of FastChatAPI to Fly.io. This setup ensures smooth, reliable, and hands-free deployment.
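The safety of this setup rests on how GitHub Actions runs a job: steps execute in order, and by default a failing step fails the job and skips the steps after it. Because "Run unit tests" comes before flyctl deploy, a failing test run means nothing is deployed. The sketch below models that fail-fast behaviour locally with subprocess; run_pipeline and PIPELINE are hypothetical names, and actually running PIPELINE would deploy, so treat it as a model of the workflow rather than a replacement for it.

```python
import subprocess
from collections.abc import Sequence

Step = tuple[str, Sequence[str]]


def run_pipeline(steps: Sequence[Step]) -> list[str]:
    """Run named commands in order, stop at the first failure, and return the names that succeeded."""
    completed: list[str] = []
    for name, command in steps:
        try:
            returncode = subprocess.run(list(command)).returncode
        except FileNotFoundError:
            returncode = 127  # executable not found, as a shell would report
        if returncode != 0:
            print(f"Step {name!r} failed (exit code {returncode}); skipping remaining steps.")
            break
        completed.append(name)
    return completed


# Roughly mirrors the deploy job in fly-deploy.yml (requires hatch and flyctl on PATH).
PIPELINE: list[Step] = [
    ("Install dependencies", ["hatch", "env", "create"]),
    ("Run unit tests", ["hatch", "run", "test"]),
    ("Deploy", ["flyctl", "deploy", "--remote-only"]),
]
```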

Summary of Session

Reflecting on building and deploying FastChatAPI, I realized several critical decisions, challenges, and lessons shaped the project's outcome. Here's a detailed summary of the sessions, highlighting the insights and best practices gained along the way.

Highlighting Key Decisions

Initial Project Setup: Starting with a template sets the tone for the rest of the project. It provides a solid foundation and allows me to focus on adding new functionality rather than setting up boilerplate code from scratch. This approach saves time and ensures that best practices are followed.

Choosing Technologies: Selecting the right tools was crucial for the project's success. FastAPI was chosen for its lightweight, high-performance API development capabilities. Fly.io offered a robust platform for seamless global deployment, ensuring the application could handle traffic spikes and provide a smooth user experience. GitHub Actions directly integrated powerful CI/CD capabilities into the repository, automating tedious tasks and maintaining high code quality standards.

AI Assistance: Leveraging AI tools like Knowledge Bot and Cursor.sh dramatically streamlined my workflow. Tailored to my specific knowledge base, Knowledge Bot acted as a pair programming partner, while Cursor.sh, an AI IDE, went beyond traditional plugins to offer context-aware coding suggestions and automated repetitive tasks. These tools provided insightful suggestions and significantly boosted productivity.

Overcoming Challenges

Docker Configuration Issues: During the Docker setup, I encountered common issues such as port conflicts. These were resolved by ensuring the correct port mappings and adhering to best practices for container networking. Testing the Docker setup locally before deploying to Fly.io helped identify and fix issues early.

Continuous Deployment Pipeline: Setting up the CI/CD pipeline with GitHub Actions wasn't without hurdles. Handling secrets securely and preventing overlapping deployments with concurrency control were critical steps toward a reliable deployment process. These efforts ensured that every push to the main branch triggered automated tests and deployments, maintaining a continuous and reliable deployment workflow.

Key Learnings and Best Practices

Incremental Development: Developing small, incremental features proved invaluable. Starting with a simple "Hello World" endpoint allowed me to verify that the setup was correct, making it easier to expand the application incrementally without significant disruptions. This approach ensured the core functionality was stable before adding more complex features.

Automating Everything: The importance of automation in development, testing, and deployment cannot be overstated. Automating tests and deployments mitigates human error, ensures a consistent process, and saves time. Changes were consistently tested and validated by integrating tests into the CI pipeline, maintaining high code quality standards.

Security Practices: Securely handling sensitive information was a top priority. Storing API tokens in GitHub Secrets kept them secure and inaccessible to unauthorized users. This practice maintained the integrity of the deployment pipeline and ensured that sensitive data was protected.

Wrapping Up the Flight

Our journey with FastChatAPI began by setting up the project structure using a starter template. This decision provided a solid foundation that followed best practices, allowing us to focus on the development work.

We then developed the core FastAPI application, creating the main file (api.py) and setting up a Uvicorn server (server.py) to handle incoming requests efficiently. We ensured our setup was correct by starting with a simple "Hello World" endpoint before expanding to more complex features.

The next step was to containerize the application using Docker. This ensured consistent running environments across different stages of the development process. We tested the Docker setup locally to verify functionality and address any configuration issues early on.

To ensure the reliability of our application, we wrote unit tests for our API endpoints. Setting up continuous integration with GitHub Actions allowed us to automate these tests, ensuring code quality and reliability with every change.

Finally, we configured a continuous deployment pipeline using GitHub Actions to automate the release process to Fly.io. This setup allowed us to deploy updates seamlessly, maintaining a continuous and reliable deployment workflow.

Benefits of AI-Powered Deployment

Integrating AI tools like Knowledge Bot and Cursor.sh into the development process brought significant benefits. AI dramatically boosted productivity by automating repetitive tasks, generating intelligent code suggestions, and accelerating the overall development process.

Efficiency was a major gain, as AI handled tasks that would otherwise consume considerable time. By automating these tasks, we could focus on the project's more strategic and creative aspects.

AI also enhanced reliability. Automated tests and deployment pipelines, supported by AI, ensured consistent quality and reduced the risk of human errors. This helped maintain high standards throughout the development lifecycle.

The workflow was streamlined, thanks to AI's ability to understand context and offer relevant suggestions. This allowed for a more fluid development process, tackling complexities without getting bogged down in routine tasks.

Future Work

Looking forward, there are several potential enhancements for FastChatAPI:

Database Integration: A database would enhance data management capabilities, providing a more dynamic and versatile application.

User Authentication: Implementing user authentication is crucial for secure access control. This addition will provide a robust security framework, ensuring only authorized users can access sensitive functionalities.

Frontend Development: Building a frontend interface using React will significantly improve user experience. A seamless user interface will make the application more accessible and user-friendly, enhancing engagement.

Final Reflections

Working on FastChatAPI was a gratifying experience. It showcased the power of modern development tools and AI assistance in creating efficient, scalable applications. The integration of these technologies significantly enhanced the development process, providing insights, efficiency, and automation that would have been difficult to achieve otherwise.

Leveraging tools like FastAPI, Fly.io, GitHub Actions, and AI assistance transformed how I approached development. These modern tools streamlined workflows, reduced errors, and accelerated deployment, making the entire process smoother and more enjoyable.

In conclusion, the FastChatAPI project is a testament to the possibilities unlocked by combining cutting-edge technologies with AI assistance. It's a glimpse into the future of development—an efficient, reliable, and enjoyable journey, flying fast and furious into the modern world of software development.
